You know that sense of dread when you walk into a supermarket that is not your usual one. You arrive with a simple plan, yet the aisles feel unfamiliar and the signs offer only vague clues. A routine errand suddenly turns into a small quest. Now imagine the unexpected relief that follows when, despite being somewhere entirely new, you begin to navigate instinctively. Your brain predicts where the pasta probably is. You sense which area holds the produce. You reach for the shelf that feels like the right place for that specific item you’re looking for. In seconds, the environment becomes readable, almost as if it were designed with your intuition in mind.

That experience is design speaking. Not through instructions or labels, but through an emotional current that guides users before they can consciously process anything. Digital products work the same way. Long before users analyze a layout or evaluate functionality, they form an impression.

Research by the University of Washington showed that a significant portion of visitors judge a website’s credibility based largely on visual design, and that many users abandon sites when the visual appeal fails to meet their expectations.

When Design Matches Human Intuition

Now imagine you step into a kitchen you’ve never been in before. You’re looking for a mug and you find one right away. You can also predict where the light switch is and where the spoons are stored. In moments like these, your intuition does the heavy lifting, and thoughtful design supports it.

This is the type of interaction modern AI systems are beginning to create. Interfaces evolve quickly, content appears before users even request it, and the structure of the experience updates itself based on behavioral patterns that only algorithms can detect.

As these AI-driven interactions grow more common, they introduce new emotional dynamics. When an adjustment aligns with a user’s expectations, it can feel almost magical. When it appears at the wrong moment, it can feel oddly intrusive. Similarly, a small misinterpretation can turn a smooth interaction into a confusing one. The challenge is that designers often believe they know how people will feel during these moments, but belief is not the same as evidence.

This is where user testing becomes indispensable. Through think-aloud sessions, behavioral observations, sentiment-rich videos and structured research methods, UX research platforms reveal the reactions that users rarely express directly.

Decades of cognitive psychology research show that emotional arousal affects how people focus, choose and remember.

We can see these mechanisms in real sessions when participants hesitate, relax their shoulders, lean toward the screen or shift in their seats long before they articulate what they think.

All of this leads to a simple guiding idea: AI is accelerating the way digital experiences evolve. Users bring expectations shaped by every interface they have ever touched. Emotion quietly shapes how those experiences are interpreted. The only reliable way to understand that emotional reality is by watching people interact with what we create.

Emotional design is no longer optional. It is a strategic advantage, a creative responsibility and an essential foundation for trustworthy AI-shaped experiences.

Why Emotional Design Matters Today: Humans, Emotion and the Acceleration Brought by AI

Imagine a digital world that keeps getting more crowded. New apps and interfaces multiply weekly. AI systems generate layouts, messages and suggestions at breakneck speed. In the middle of all this activity, users are not evaluating every detail with calm analysis. They are simply trying to process how a digital space makes them feel, in order to decide whether to stay or walk away, often within seconds.

This is where emotional design comes in. The human brain processes visual impressions far more quickly than text. Studies in visual cognition indicate that the brain can process certain visual features, such as contrast, motion or basic shapes, very rapidly. Research from institutions like MIT supports the view that visual perception relies on fast, automatic processes: a study led by MIT’s Mary Potter, for example, found that people can grasp the gist of an image shown for as little as 13 milliseconds.

Users sense tone, structure and intent before they consciously read a line of text. Their emotional response becomes the first signal.

Artificial intelligence adds complexity to this dynamic. AI systems do not simply present information; they personalize it, adapt quickly to patterns and generate content that feels surprisingly specific. This specificity raises the stakes for emotional design.

How People Think

Consider the experience of landing on a website that seems to anticipate your interests. Maybe it highlights a category you often browse or offers a suggestion that makes sense based on your recent behavior.

Psychologists studying decision-making, including Daniel Kahneman, have shown that people often rely on fast intuitive judgments, commonly known as System 1 thinking. Research on processing fluency also suggests that when an interface aligns with these intuitive expectations, interaction feels easier. When the experience disrupts that fluency, users sense the tension even if they cannot verbalize why.

This is where the combination of emotion and AI becomes essential. As AI tools generate more of the designs, emotional quality becomes paramount, since a ‘good’ or appealing appearance does not guarantee alignment with human intuition.

In short, emotional resonance requires more than pattern matching. It requires an understanding of why a pattern matters.

A simple example illustrates this shift. Think of an AI-powered shopping assistant that rearranges recommendations in real time. When its suggestions align with what the user has in mind, the interaction feels smooth and supportive. When the system misinterprets the intention, the moment shifts. The user may not feel annoyed, but there is a small drop in confidence. Research on digital experiences has shown that even subtle mismatches in automated suggestions or interface behavior can reduce trust and make interactions feel less dependable.

The speed of AI has not made human intuition less important; it has made it more visible.

Every interaction becomes a micro-moment in which the user subconsciously asks: does this feel right? The answer arrives as an emotional reaction long before it becomes a logical one.

The Hidden Brain at Play: How Emotion Keeps Shaping the Experience While Users Explore

Once the first impression settles, the mind keeps working quietly in the background. Users may believe they are simply navigating an interface, yet a deeper emotional process begins to unfold.

Neuroscientist Antonio Damasio describes these early reactions as somatic markers. These bodily signals are learned through past experience and guide decisions before conscious reasoning takes over. A shift in posture, a tiny spark of curiosity or a moment of hesitation can influence a user’s interpretation of the experience.

Imagine someone interacting with a new feature. They tap, scroll and glance around, but beneath the surface their brain is predicting what should happen next. Psychologist Lisa Feldman Barrett explains that the brain constantly anticipates outcomes based on past experiences. When an interface aligns with those predictions, users move through it with ease. When something feels unfamiliar, the body sends quiet alerts, signaling that extra attention is needed.

Research in cognitive psychology shows that clarity improves processing fluency and supports smoother interaction, while uncertainty increases cognitive effort and slows behavior. These shifts can appear as subtle microexpressions. Paul Ekman, a psychologist and leading expert on facial expressions, emotion and deception, demonstrated that brief facial movements often reveal emotions people do not express verbally.

A raised eyebrow or a short pause can say more about a user’s experience than a full sentence.

Now, let’s consider how AI interprets these patterns. Modern systems can detect behavioral patterns such as interaction rhythms, hesitation points and clusters of actions that reveal subtle emotional responses. Neuroscientist Joseph LeDoux has shown that emotional circuits activate based on the significance the brain assigns to a stimulus rather than its objective properties.

AI tools mirror this idea when they highlight patterns that suggest confusion or engagement. They may not know the reason behind the behavior (yet), but they can point teams toward moments that deserve attention.

Emotion: The Quiet Partner That Shapes Interactions

These emotional micro signals also influence what users remember. Studies in affective science show that emotionally charged moments are easier to recall than neutral ones. A quick spark of delight or a moment of confusion can change how someone talks about a product later. Often, the story they remember is shaped by how the experience made them feel, not by the specific steps they followed.

As interfaces become more adaptive through AI, these emotional undercurrents gain more relevance. User interfaces (UIs) evolve while the user is still exploring, and throughout that process the brain continues to build meaning in real time.

Emotion is not a layer added to design. It’s the quiet partner shaping every moment of interaction.

Designers and researchers who understand this process can create experiences that feel genuinely intuitive.

Rethinking Emotional Design: The Experience Constellation

Designers often describe experiences as a sequence of screens or steps. Users, on the other hand, live them as a constellation of signals that appear all at once.

These signals work like stars that set the emotional atmosphere. Some shine brightly, while others operate quietly in the background. Together, they give the experience its sense of direction.

Now imagine a person is trying out a new digital product. They absorb the first impression, and almost instantly their attention moves toward the next cue that feels meaningful. It might be a button that communicates confidence, a motion pattern that feels familiar or a tone of voice that sounds warm. None of these elements waits its turn. Instead, they coexist.

Leading UX expert Don Norman has long described emotional responses as operating on multiple levels, from rapid visceral reactions through behavioral responses to reflective interpretation. Modern interfaces simply add more cues that influence these early impressions.

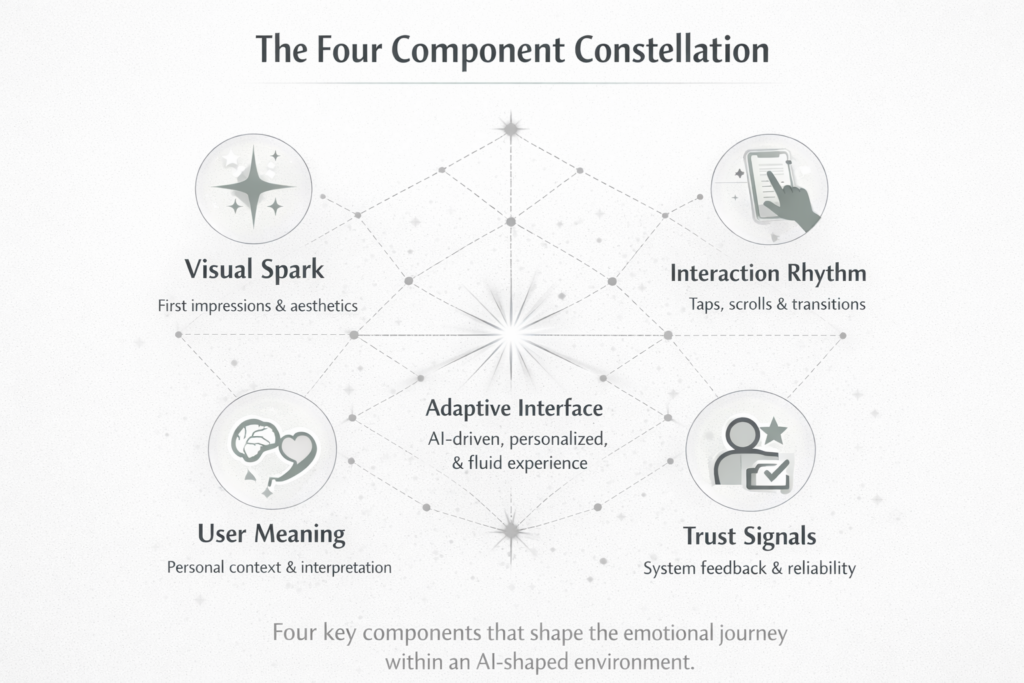

The Four Component Constellation

To make this easier to visualize, picture four key components that typically shape an emotional journey.

- The first is the initial connection when the interface appears.

- The second is the interaction rhythm set by taps, scrolls and transitions.

- The third is the trust that builds through subtle confirmations that the system understands the user’s intention.

- The fourth is the meaning each person brings from their own context, a core idea in the work of affective scientists like Lisa Feldman Barrett, who, as mentioned earlier, describes emotions as constructed rather than automatically triggered.

Now imagine this constellation inside an AI-shaped environment. The visual spark can shift from one person to another because layouts adapt. The interaction rhythm evolves as behavioral predictions influence the flow. Trust becomes dynamic when the system reacts and anticipates. Meaning adjusts in real time as users respond to new elements on the screen.

Emotional design becomes a moving system in this setting. It responds, adapts and creates new paths depending on each user’s actions. A feature that feels intuitive to one person might feel unfamiliar to someone else because the interface changes based on prediction.

This is where user testing gives teams the ability to map out the ‘constellation.’ In moderated sessions, researchers can watch the emotional temperature shift from moment to moment and see where clarity, trust and motivation rise or fall. These observations are consistent with findings in affective computing, a field advanced by researchers such as Rosalind Picard, who has explored how technology can detect and interpret emotional patterns.

The goal is not to create constant delight. The real aim is to understand how each emotional cue fits within the whole experience.

When teams can see the constellation clearly, they identify which elements guide the user and which ones create confusion.

Emotional design becomes the craft of orchestrating adaptive experiences that stay coherent, so AI-driven changes make users feel guided, not confused.

Emotional Listening: How User Testing Reveals What Interfaces Don’t Say Out Loud

Users don’t just complete tasks. They react, interpret and form tiny internal narratives that shape their perception of the product. User testing is the moment where those narratives come to light.

Imagine sitting in on a session where a participant explores a new feature. They lean forward slightly, pause for a breath before deciding what to click, and their tone brightens when something feels clear. None of this appears in analytics dashboards. Rather, these are emotional signals that operate between intention and action.

Ekman’s decades of work have shown that microexpressions and subtle behavioral shifts reveal genuine emotional processing even when users don’t put their reactions into words.

Unmoderated think-aloud sessions are especially revealing. A participant might say something as simple as “I think this is where I should go” with a small hesitation in their voice. That hesitation can signal a break in confidence. Researchers can then compare what the user says with how they behave, and that combination offers a more accurate view of their emotional experience.

AI-enhanced platforms add another dimension to this listening process. Machine learning can highlight behavioral clusters that appear across participants. A brief pause that seems minor in one session becomes meaningful when it reappears in 20 more. The field of affective computing (shaped by researchers like Rosalind Picard at MIT) supports the idea that technology can detect emotional patterns that are too subtle for humans to label consistently.
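To make the idea of “a pause that reappears in 20 more sessions” more concrete, here is a minimal Python sketch. It assumes each session is logged as a list of timestamped events on named UI elements; the `Event` type, the two-second pause threshold and the 50% share cutoff are hypothetical choices for illustration, not how any particular research platform works. It only counts recurring long pauses, whereas real systems would likely combine many more signals and use proper clustering models.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    """One logged interaction (hypothetical schema)."""
    t: float       # seconds since the session started
    target: str    # identifier of the UI element the user acted on


def hesitation_targets(session, pause_threshold=2.0):
    """Return the targets that were preceded by a pause longer than pause_threshold seconds."""
    flagged = set()
    for prev, curr in zip(session, session[1:]):
        if curr.t - prev.t > pause_threshold:
            flagged.add(curr.target)
    return flagged


def recurring_hesitations(sessions, pause_threshold=2.0, min_share=0.5):
    """Map each target to the share of sessions that hesitated before it,
    keeping only targets at or above min_share."""
    if not sessions:
        return {}
    counts = defaultdict(int)
    for session in sessions:
        # A set per session, so one participant pausing twice counts once.
        for target in hesitation_targets(session, pause_threshold):
            counts[target] += 1
    return {
        target: count / len(sessions)
        for target, count in counts.items()
        if count / len(sessions) >= min_share
    }


# Three made-up sessions: two participants pause before "filters", one does not.
sessions = [
    [Event(0.0, "home"), Event(1.2, "search"), Event(4.8, "filters"), Event(5.5, "results")],
    [Event(0.0, "home"), Event(0.9, "search"), Event(3.6, "filters"), Event(4.1, "results")],
    [Event(0.0, "home"), Event(1.0, "search"), Event(1.8, "filters"), Event(2.5, "results")],
]
result = recurring_hesitations(sessions)
print(result)  # only "filters" is flagged, in 2 of 3 sessions
```

Counting each target at most once per session keeps a single very hesitant participant from dominating the result; in practice, the threshold and cutoff would need tuning against real recordings.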

Consider a moment during a test where the system offers a recommendation. If the suggestion aligns with what the user wants, the flow continues without friction. If the suggestion feels slightly off, the user’s rhythm changes. Not significantly, but enough to hint at an emotional shift. These are the moments where trust is shaped. Research shows that users often evaluate AI features through perceived ease and emotional comfort.

User testing also clarifies how emotion guides memory. Users often remember how an experience made them feel rather than what they clicked or in which order they reached the goal.

When teams learn to listen at this emotional level, the experience becomes clearer. They see which interactions carry the most weight, which cues help users orient themselves and which micro moments reshape trust. Emotional listening turns user testing into guidance that no algorithm or static metric can replace.

And the more adaptive interfaces become through AI, the more important this listening becomes. Emotional cues help teams understand what the system is communicating unintentionally. They reveal the stories users create in their minds and the meaning they construct as the interface responds to them. This understanding gives teams the confidence to refine experiences until they feel genuinely human.

Predictability and trust can outweigh pure accuracy when the experience feels smooth.

It’s no wonder some UX/UI teams call themselves “computer psychologists”!

Expert Perspectives: How Leading Voices are Shaping the Future of Emotional Design

Every discipline evolves through the people who challenge its assumptions, and the field of emotional design is no exception.

Antonio Damasio’s research on somatic markers revealed how bodily sensations influence the choices people make, even in seemingly simple tasks. This insight shifts how teams think about interaction design. A smooth flow is not only efficient. It also reduces the cognitive load that can trigger uncertainty. When users feel grounded, the interface becomes easier to navigate.

Another influential perspective comes from Barrett, whose theory of constructed emotion reminds us that users do not arrive with a single universal interpretation. They bring their own histories and expectations, which shape how they understand what they see. For product teams working with global audiences, this reinforces the need to observe emotional reactions across cultures. A cue that feels inviting in one region can feel overly casual or confusing in another.

Ekman’s work adds another layer to the conversation. In moderated testing, facial expressions help researchers understand moments of uncertainty or confidence. They also highlight where AI-driven features need clearer framing.

AI researchers contribute an essential perspective as well. As mentioned before, Rosalind Picard’s work in affective computing shows how technology can interpret emotional signals through behavior, voice, and interaction patterns.

Their findings emphasize the idea that emotional coherence is just as important as functional clarity.

Together, these perspectives create a more nuanced view of emotional design. They encourage teams to see the experience not as a sequence of interactions, but as an evolving relationship between user and system.

Each of these voices pushes the field toward a more sophisticated understanding of emotion as a core structure of human interpretation.

For design teams working in an AI-shaped world, these insights help build products that stay emotionally coherent even as they adapt.

AI and Emotional Design: The New Frontier of Interaction

AI is reshaping how people experience digital products, and interfaces no longer behave like static layouts. These changes bring new emotional dynamics that teams need to understand if they want their products to feel grounded and trustworthy.

Let’s picture an adaptive interface that tailors its layout based on recent behavior. A subtle rearrangement can feel smooth when it aligns with the user’s intention.

Research from Stanford’s Human-Centered AI Institute shows that people rely on emotional cues to assess whether an intelligent system understands them.

The emotional tone of each adjustment from an AI becomes part of the user’s relationship with the product.

Artificial intelligence also influences the rhythm of interactions. When a recommendation appears at the right moment, the experience feels fluid. When it appears too early or too late, the user interprets the suggestion as noise rather than help. Interaction scientists like Clifford Nass demonstrated that people apply social expectations to machines, responding to timing, coordination and responsiveness much as they do in human interactions.

A mismatch in rhythm is often read as a mismatch in understanding.

Another shift comes from the growing presence of conversational agents. These systems create a different emotional landscape because people naturally assign personality traits to them.

AI also brings opportunities to deepen emotional insight. Machine learning models can detect recurring hesitation points or behavioral clusters across sessions, revealing moments where the interface feels ambiguous.

As AI-shaped experiences become more common, emotional design grows more complex. Interfaces gain the ability to respond dynamically, which means the emotional meaning of an interaction can change as the system adapts.

Emotional design in the age of AI should not be about creating constant delight. Rather, the new challenge is to craft environments where intelligent systems behave in ways that feel coherent, respectful and easy to interpret. When teams understand the emotional impact of each adaptive moment, they can create products that feel stable even as they evolve.

The Ethical Edge: Designing Emotion with Responsibility in an AI-Shaped Landscape

AI brings new emotional dynamics into digital experiences, which turns emotional design into an ethical practice as much as a creative one.

Users react not only to what a system shows, but to what they sense the system understands about them. That perception influences trust, comfort and the feeling of safety inside any interaction.

Personalization is one area that demands attention because it requires teams to decide how far an adaptive system should go in interpreting and acting on user intent. Adaptive features can feel genuinely helpful when they align with a user’s goals and context, yet overly specific suggestions can trigger unease by signaling that the system “knows too much.” Reactions to AI-driven personalization can also vary across cultures and age groups, which makes diverse testing essential to avoid emotional overreach and unintended boundary violations.

That same responsibility extends naturally into emotion inference, since it raises the stakes of what the system claims to understand about the user. Models that interpret tone, facial expressions, or behavior can help teams detect ambiguity and respond with more care, but they also risk misreading users and acting on assumptions. For that reason, emotion inference should be limited to clear use cases, paired with transparency about what signals are being used and why, and designed so users can understand, contest, or opt out of emotionally loaded interpretations.

As interfaces adapt in real time, users still need stable anchors to stay oriented. Adaptive interfaces benefit from consistent cues, predictable interaction patterns, and a tone that remains coherent even as content and recommendations shift. Emotional design supports this by preserving continuity, helping changes feel guided rather than surprising, and reducing the cognitive cost of re-learning the system.

User testing becomes the safeguard across all these dimensions. It reveals when personalization feels intrusive, when emotion inference feels presumptive or opaque, and when dynamic behavior causes confusion. Evaluating emotional reactions across varied demographics helps teams avoid one-dimensional assumptions and design adaptive products that remain effective while respecting users’ boundaries.

Ethical emotional design is not a limitation. It clarifies how AI-driven experiences should behave to remain trustworthy, transparent and emotionally coherent.

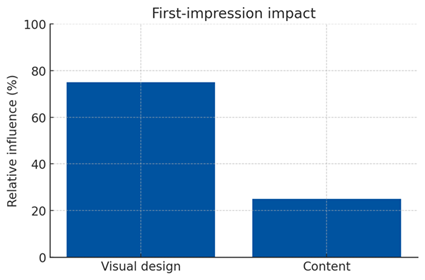

Chart Insights

1. First-Impression Impact

Visual design drives a large share of first-impression judgments, with figures commonly reported in the 46–75% range.

Source: Stanford Web Credibility Project (2006)

Key points:

- Around three-quarters of users form credibility impressions based largely on visual design.

- Most early judgments occur within seconds.

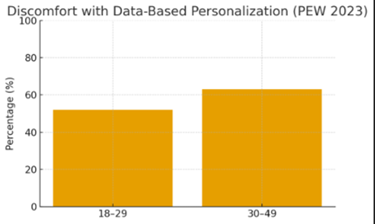

2. Discomfort with Data-Based Personalization by Age

Percentage of U.S. adults who report feeling uncomfortable when companies use personal data to personalize content or advertising.

Source: Pew Research Center. (2023). How Americans Think About Data and Personalization.

Key points:

- Sensitivity to personalization increases with age.

- Younger adults (18–29) are more tolerant of algorithmic personalization.

- Adults in the 30–49 range show significantly higher discomfort, aligning with broader concerns about data privacy, tracking, and targeted content.

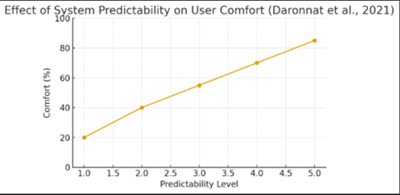

3. Effect of System Predictability on User Comfort

Source: Daronnat et al. (2021)

Key points:

- Higher predictability consistently increases user trust and comfort.

- Stable, coherent system cues reduce uncertainty and make interactions feel safer.

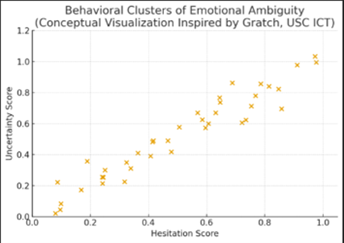

4. Emotional Cues in Interaction Behavior

Research from Jonathan Gratch and the USC Institute for Creative Technologies indicates that behavioral patterns such as hesitation, pauses or repeated movement sequences can signal emotional ambiguity. His work shows that clusters of micro-behaviors often predict moments of confusion or heightened engagement.

Source: Work from Jonathan Gratch, USC Institute for Creative Technologies (2023)

Key points:

- Repeated hesitation patterns can signal emotional ambiguity, according to affective computing research by Jonathan Gratch at USC ICT.

- Clusters of interaction behaviors have been shown to predict moments of confusion or heightened engagement in user-AI interactions.

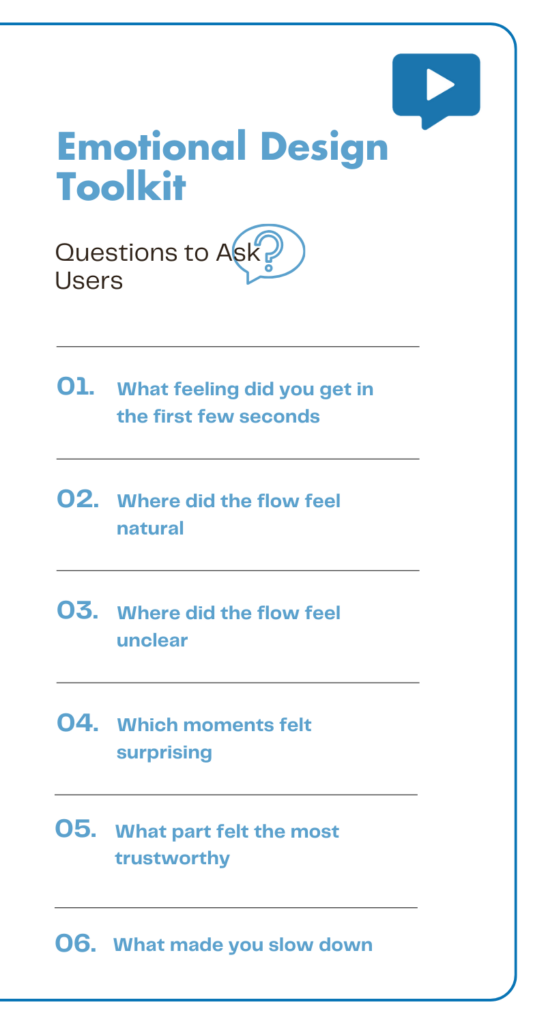

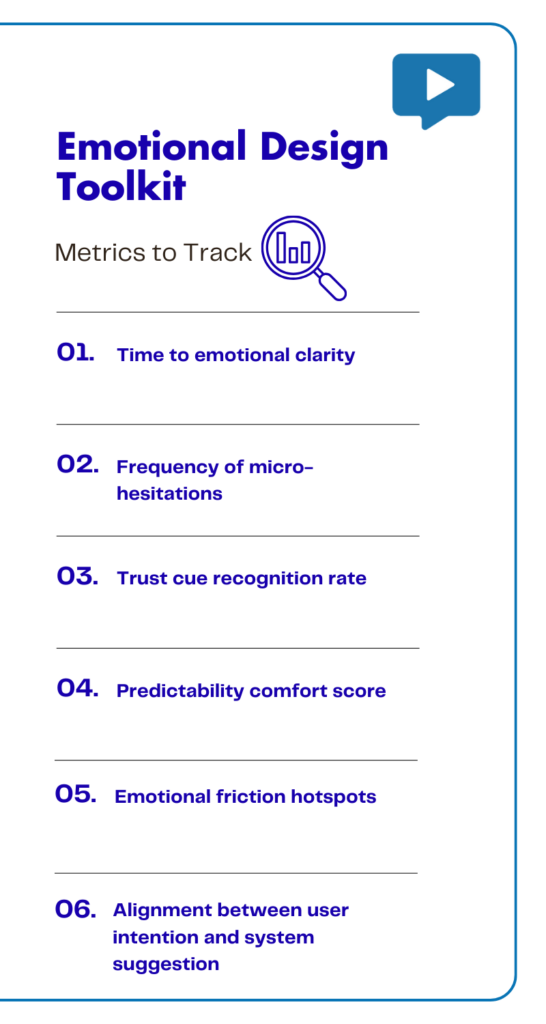

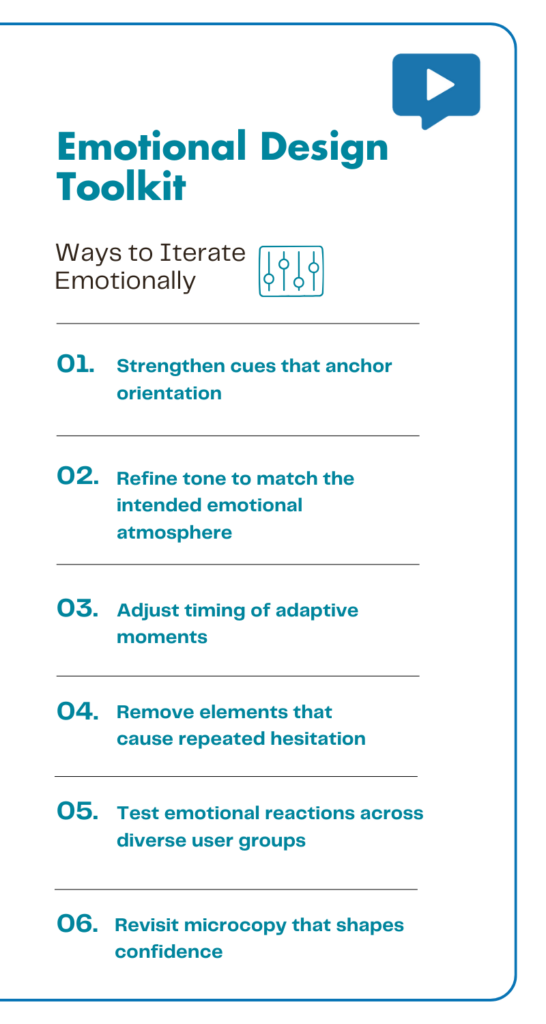

Emotional Design Toolkit: A ‘Monday-Morning’ Guide for Product Teams

*This toolkit synthesizes insights from affective computing, behavioral psychology and human–computer interaction. It draws on foundational research from MIT Affective Computing, Paul Ekman, Stanford’s work on social responses to technology and contemporary studies on predictability and trust in adaptive systems.*

In Conclusion

Emotional design has always guided how people understand digital experiences. What changes now is the speed and complexity introduced by AI. Interfaces learn, adapt and evolve during the interaction, which means the emotional atmosphere of a product is never static. It shifts in real time, just like the users who move through it.

Teams must listen closely to these emotional signals to gain a clearer view of how their products behave. User testing reveals the quiet reactions that shape trust and meaning. AI helps uncover patterns across many sessions. Together, they create a more honest picture of the experience.

Curious how user testing can transform your product? Request a free demo and explore what emotional clarity feels like.

FAQ